Direct Download | App Store Link (coming soon) | Play Store Link (coming soon)

shdwDrive v2 transforms cloud storage into a decentralized ecosystem where users can not only store their files securely but also participate in and earn from the network. Our platform eliminates traditional centralized storage providers, replacing them with a community-driven network where everyone can contribute and benefit.

shdwDrive creates a marketplace where:

Users get reliable, secure decentralized storage

Operators earn SHDW tokens for providing storage capacity

The community shapes the network's future

Everyone benefits from transparency and fairness

Want to dive deeper into how shdwDrive works? Check out our shdwDrive v2 Economics documentation to learn about the network's economic model, proof systems, and mathematical foundations that ensure fair rewards for all participants.

Your complete resource for using shdwDrive v2 – whether you're storing files or earning rewards as a network operator. Now that we have launched our latest technology, stay tuned for frequent updates to our guides!

This section is being redesigned to support the latest shdwDrive v2. What remains are guides for the previous version, shdwDrive v1.5 (soon to be deprecated), which focus on simple, actionable information to help you get started quickly. Step-by-step guides and line-by-line CLI instructions offer developers the quickest path to working concepts.

Much like an appendix, the reference section is a quick navigation hub for key resources. Our media kit, social media presence, and more can be found here.

This resource will be updated frequently, and the developer community should feel empowered to suggest edits they would like to see or ideas for platform enhancements. We also have a process for reporting bugs.

Read about shdwDrive v2 Economics

shdwDrive v1.5 is no longer maintained. Please migrate to v2 and consult the new developer guide for instructions.

Our SDK options provide the simplest means of linking your application to shdwDrive. With a variety of environments to choose from, developers can enjoy a robust and constantly evolving platform that leverages the full potential of shdwDrive. GenesysGo is dedicated to maintaining these SDKs, continuously enhancing developer capabilities, and streamlining the building process. We value your feedback and welcome any suggestions to help us improve these valuable resources.

Javascript

Rust

Python

shdwDrive v1.5 is no longer maintained. Please migrate to v2 and consult the new developer guide for instructions.

To use the S3-compatible gateway for the shdwDrive network, head over to https://portal.shadow.cloud, connect your wallet and sign in, and then navigate to the storage account for which you'd like to generate an access key/secret key pair. Select the "Addons" tab to enable the addon and add S3-compatible key/secret pairs.

Once you've enabled the S3 Access addon for a given storage account, you can view and add more S3-compatible key/secret pairs by navigating to the storage account of your choosing on https://portal.shadow.cloud.

To get your credentials, simply click "View Credentials".

Once enabled, you can manage the individual permissions of each key/secret pair you generate for a given storage account. The list of permissions is configured to be compatible with existing S3 clients. Some examples of compatible S3 clients are rclone, s3cmd, and the AWS CLI. A wealth of tooling exists for S3-compatible gateways, which is why we chose to build this into the shdwDrive network.

In general, if you want to enable uploads with one of your keys, you'll need to enable the following permissions:

You can also control if a key is able to read, which allows you to have read, write, and read+write keys for various use cases.

In order to access the s3 compatible gateways on the shdwDrive network, you'll need to configure your s3 client to use one of the following gateways:

https://us.shadow.cloud

https://eu.shadow.cloud

These endpoints proxy requests to the shdwDrive network and allow you to get the best network connectivity possible given your geographical preference. All uploads are synced and available globally, so you can use either endpoint.

The following is an example that you can add to your rclone configuration file. Typically, this is located in `~/.config/rclone/rclone.conf`.
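For scripted setups, a section like the rclone example in this guide can also be generated rather than typed by hand. The following is a minimal sketch, not an official tool: it uses only Python's standard `configparser`, takes the key names and gateway URLs from this guide, and the function name `build_rclone_section` and the `region` shorthand are assumptions made for illustration.

```python
import configparser
import io

# Gateway URLs as listed in this guide; the "us"/"eu" shorthand is an assumption.
GATEWAYS = {
    "us": "https://us.shadow.cloud",
    "eu": "https://eu.shadow.cloud",
}


def build_rclone_section(access_key_id: str, secret_access_key: str,
                         region: str = "us",
                         remote_name: str = "shdw-cloud") -> str:
    """Return rclone.conf text for one S3-compatible remote (hypothetical helper)."""
    if region not in GATEWAYS:
        raise ValueError(f"unknown region {region!r}; expected one of {sorted(GATEWAYS)}")
    # interpolation=None so that a '%' inside a secret is written verbatim.
    config = configparser.ConfigParser(interpolation=None)
    config[remote_name] = {
        "type": "s3",
        "provider": "Other",
        "access_key_id": access_key_id,
        "secret_access_key": secret_access_key,
        "endpoint": GATEWAYS[region],
        "acl": "public-read",
        "bucket_acl": "public-read",
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    # Placeholder credentials; use the values shown under "View Credentials".
    print(build_rclone_section("YOUR_ACCESS_KEY", "YOUR_SECRET_KEY", region="eu"))
```

The output can be appended to your rclone configuration file. Because uploads are synced globally, the choice of region here only affects which gateway your client talks to.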

In order to ensure the stability of the shdwDrive network, speeds are initially limited to 20 MiB/s. You can opt to upgrade the bandwidth rate limit for each individual key/secret pair you generate. The upgraded rate limit is 40 MiB/s.
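To get a feel for what these limits mean in practice, the sketch below computes a lower bound on transfer time for a given file size. It assumes the limit is the only bottleneck and that throughput is sustained at exactly the cap, which real transfers will rarely achieve; the function name is hypothetical.

```python
MIB = 1024 * 1024  # bytes in one mebibyte

DEFAULT_LIMIT_MIB_S = 20   # initial per-key limit stated in this guide
UPGRADED_LIMIT_MIB_S = 40  # upgraded per-key limit stated in this guide


def min_transfer_seconds(size_bytes: int, limit_mib_per_s: float) -> float:
    """Best-case transfer time when throughput is capped at the given rate."""
    if size_bytes < 0:
        raise ValueError("size_bytes must be non-negative")
    if limit_mib_per_s <= 0:
        raise ValueError("limit_mib_per_s must be positive")
    return size_bytes / (limit_mib_per_s * MIB)


if __name__ == "__main__":
    one_gib = 1024 * MIB
    for limit in (DEFAULT_LIMIT_MIB_S, UPGRADED_LIMIT_MIB_S):
        seconds = min_transfer_seconds(one_gib, limit)
        print(f"1 GiB at {limit} MiB/s: at least {seconds:.1f} s")
```

Since the limit applies per key/secret pair, doubling the limit halves this best-case time for transfers made with that key.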

In the event an S3 key has been compromised, you can easily rotate the key. Simply navigate to https://portal.shadow.cloud, connect your wallet, select a storage account, and then click on the "Addons" tab. From there, you can click the "Rotate" button to replace a given key/secret pair with a fresh pair.

After you install ShdwDrive, learn how to use the CLI client.

If you prefer, here is a step by step video walkthrough.

Permissions required for uploads: Get Object, Put Object, List Multipart Upload Parts, Abort Multipart Upload

Example rclone configuration:

[shdw-cloud]
type = s3
provider = Other
access_key_id = [redacted]
secret_access_key = [redacted]
endpoint = https://us.shadow.cloud
acl = public-read
bucket_acl = public-read

npm install -g @shadow-drive/cli

shdwDrive SDK

shdwDrive CLI

The shdwDrive SDK is a TypeScript SDK for interacting with shdwDrive, providing simple and efficient methods for file operations on the decentralized storage platform.

📤 File uploads (supports both small and large files)

📥 File deletion

📋 File listing

📊 Bucket usage statistics

ShdwDriveSDK

Constructor Options

Methods

uploadFile(bucket: string, file: File, options?: FileUploadOptions)

deleteFile(bucket: string, fileUrl: string)

listFiles(bucket: string)

A command-line interface for interacting with shdwDrive storage.

📤 File uploads (supports both small and large files)

📁 Folder support (create, delete, and manage files in folders)

📥 File and folder deletion

📋 File listing

You can install the CLI globally using npm:

Or use it directly from the repository:

The CLI uses environment variables for configuration:

SHDW_ENDPOINT: The shdwDrive API endpoint (defaults to )

Upload options:

-k, --keypair - Path to your Solana keypair file

-b, --bucket - Your bucket identifier

-f, --file - Path to the file you want to upload

Delete file options:

-k, --keypair - Path to your Solana keypair file

-b, --bucket - Your bucket identifier

-f, --file - URL or path of the file to delete

Create folder options:

-k, --keypair - Path to your Solana keypair file

-b, --bucket - Your bucket identifier

-n, --name - Name/path of the folder to create

Delete folder options:

-k, --keypair - Path to your Solana keypair file

-b, --bucket - Your bucket identifier

-p, --path - Path of the folder to delete
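When the CLI is driven from a script, the upload flags listed above can be assembled into an argument list instead of a hand-written shell string. The sketch below is illustrative only: the `shdw-drive upload` command name and the optional `--folder` flag are taken from the CLI examples elsewhere in this guide, while `build_upload_command` is a hypothetical helper and not part of the CLI.

```python
import os
import shlex
from typing import List, Optional


def build_upload_command(keypair: str, bucket: str, file_path: str,
                         folder: Optional[str] = None) -> List[str]:
    """Build the argv for `shdw-drive upload` from the documented flags."""
    argv = [
        "shdw-drive", "upload",
        # Expand "~" ourselves: no shell is involved when argv is passed to subprocess.
        "--keypair", os.path.expanduser(keypair),
        "--bucket", bucket,
        "--file", file_path,
    ]
    if folder:
        argv += ["--folder", folder]
    return argv


if __name__ == "__main__":
    argv = build_upload_command("keypair.json", "your-bucket-identifier",
                                "path/to/your/file.txt", folder="optional/folder/path")
    # Print a copy-pasteable command line; to execute it, pass argv to
    # subprocess.run(argv, check=True) on a machine with the CLI installed.
    print(shlex.join(argv))
```

Passing a list rather than a single string avoids quoting problems with file names that contain spaces.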

Clone the repository:

Install dependencies:

Build the project:

Link the CLI locally:

shdwDrive v1.5 is no longer maintained. Please migrate to v2 and consult the new developer guide for instructions.

pip install shadow-drive

Also for running the examples:

pip install solders

Check out the examples directory for a demonstration of the functionality.

https://shdw-drive.genesysgo.net/[STORAGE_ACCOUNT_ADDRESS]

This package uses PyO3 to build a wrapper around the official ShdwDrive Rust SDK. For more information, see the .

Section under development.

🔐 Secure message signing

⚡ Progress tracking for uploads

🔄 Multipart upload support for large files

getBucketUsage(bucket: string)

createFolder(bucket: string, folderName: string)

deleteFolder(bucket: string, folderUrl: string)

🔐 Secure message signing

🔄 Multipart upload support for large files

-F, --folder - (Optional) Folder path within the bucket

# Install from npm
npm install @shdwdrive/sdk

# Or install from repository
git clone https://github.com/GenesysGo/shdwdrive-v2-sdk.git
cd shdwdrive-v2-sdk
npm install
npm run build

cd shdwdrive-v2-sdk
npm link
cd your-project
npm link @shdwdrive/sdk

import ShdwDriveSDK from '@shdwdrive/sdk';

// Initialize with wallet
const drive = new ShdwDriveSDK({}, { wallet: yourWalletAdapter });

// Or initialize with keypair
const drive = new ShdwDriveSDK({}, { keypair: yourKeypair });

const file = new File(['Hello World'], 'hello.txt', { type: 'text/plain' });
const uploadResponse = await drive.uploadFile('your-bucket', file, {
  onProgress: (progress) => {
    console.log(`Upload progress: ${progress.progress}%`);
  }
});
console.log('File uploaded:', uploadResponse.finalized_location);

const folderResponse = await drive.createFolder('your-bucket', 'folder-name');
console.log('Folder created:', folderResponse.folder_location);

const deleteFolderResponse = await drive.deleteFolder('your-bucket', 'folder-url');
console.log('Folder deleted:', deleteFolderResponse.success);

const files = await drive.listFiles('your-bucket');
console.log('Files in bucket:', files);

const deleteResponse = await drive.deleteFile('your-bucket', 'file-url');
console.log('Delete status:', deleteResponse.success);

interface ShdwDriveConfig {
  endpoint?: string; // Optional custom endpoint (defaults to https://v2.shdwdrive.com)
}

// Initialize with either wallet or keypair
new ShdwDriveSDK(config, { wallet: WalletAdapter });
new ShdwDriveSDK(config, { keypair: Keypair });

npm install -g @shdwdrive/cli

git clone https://github.com/genesysgo/shdwdrive-v2-cli.git

cd shdwdrive-v2-cli
npm install
npm run build
npm link

shdw-drive upload \
  --keypair ~/.config/solana/id.json \
  --bucket your-bucket-identifier \
  --file path/to/your/file.txt \
  --folder optional/folder/path

# Delete a file from root of bucket
shdw-drive delete \
  --keypair ~/.config/solana/id.json \
  --bucket your-bucket-identifier \
  --file filename.txt

# Delete a file from a folder
shdw-drive delete \
  --keypair ~/.config/solana/id.json \
  --bucket your-bucket-identifier \
  --file folder/subfolder/filename.jpg

shdw-drive create-folder \
  --keypair ~/.config/solana/id.json \
  --bucket your-bucket-identifier \
  --name my-folder/subfolder

shdw-drive list \
  --keypair ~/.config/solana/id.json \
  --bucket your-bucket-identifier

shdw-drive usage \
  --keypair ~/.config/solana/id.json \
  --bucket your-bucket-identifier

git clone https://github.com/genesysgo/shdwdrive-v2-cli.git

cd shdwdrive-v2-cli
npm install

npm run build

npm link

from shadow_drive import ShadowDriveClient

from solders.keypair import Keypair
import argparse

parser = argparse.ArgumentParser()
parser.add_argument('--keypair', metavar='keypair', type=str, required=True,
                    help='The keypair file to use (e.g. keypair.json, dev.json)')
args = parser.parse_args()

# Initialize client
client = ShadowDriveClient(args.keypair)
print("Initialized client")

# Create account
size = 2 ** 20
account, tx = client.create_account("full_test", size, use_account=True)
print(f"Created storage account {account}")

# Upload files
files = ["./files/alpha.txt", "./files/not_alpha.txt"]
urls = client.upload_files(files)
print("Uploaded files")

# Add and Reduce Storage
client.add_storage(2**20)
client.reduce_storage(2**20)

# Get file
current_files = client.list_files()
file = client.get_file(current_files[0])
print(f"got file {file}")

# Delete files
client.delete_files(urls)
print("Deleted files")

# Delete account
client.delete_account(account)
print("Closed account")

D.A.G.G.E.R. - Launch of - January 16th

D.A.G.G.E.R. - Launch of - September 29, 2023

shdwDrive - Release S3 Compatible Gateway - August 29, 2023

shdwDrive

shdwDrive Rust

shdwDrive

shdwDrive

Apr 5, 2023 - shdwDrive Rust

Mar 16, 2023 - shdwDrive

Mar 15, 2023 - shdwDrive Rust

Feb 28, 2023 - shdwDrive Rust

Feb 27, 2023 - shdwDrive CLI

Feb 27, 2023 - shdwDrive

Feb 27, 2023 - shdwDrive

Feb 9, 2023 - shdwDrive

Feb 8, 2023 - shdwDrive

Feb 9, 2023 - shdwDrive CLI

Dec 13, 2022 - shdwDrive CLI

Nov 28, 2022 - shdwDrive

Nov 28, 2022 - shdwDrive

Sep 22, 2022 - shdwDrive CLI

Sep 22, 2022 - shdwDrive CLI

Sep 22, 2022 - shdwDrive CLI

Sep 21, 2022 - shdwDrive CLI

Sep 21, 2022 - shdwDrive

Sep 21, 2022 - shdwDrive

Sep 21, 2022 - shdwDrive CLI

Aug 26, 2022 - Digital Asset RPC Infrastructure

Jul 26, 2022 - shdwDrive

Jul 22, 2022 - shdwDrive

Jul 12, 2022 - shdwDrive

Jul 8, 2022 - shdwDrive

ShdwDrive Developer Tools

shdwDrive v1.5 is no longer maintained. Please migrate to v2 and consult the new developer guide for instructions.

Install the

Follow the

After installing Solana, make sure you have both SHDW and SOL in your wallet in order to reserve storage

, , and are your choices.

Follow the

You can build directly on top of the .

We adhere to a responsible disclosure process for security related issues. To ensure the responsible disclosure and handling of security vulnerabilities, we ask that you follow the process outlined below.

For non-security-related bugs, please submit a new bug report. For security-related reports, please open a "security vulnerability" report.

Please provide a clear and concise description of the issue, steps to reproduce it, and any relevant screenshots or logs.

Important: For security-related issues, please include as much information as possible for reproduction and what it's related to. Please be sure to use the "report a security vulnerability" feature in the repository listed above. If you submit a security vulnerability as a public bug report, we reserve the right to remove the report and move any communications to private channels until a resolution is made.

Security related issues should only be reported through this repository.

While we strongly encourage the use of this repository for bug reports and security issues, you may also reach out to us via our Discord server. Join the #shdw-drive-technical-support channel for assistance. However, please note that we will redirect you to submit the bug report through this GitHub repository for proper handling and tracking.

These are the official social media accounts for GenesysGo and the shdwEcosystem.

A list of terms that relate to all things SHDW!

Asynchronous Byzantine Fault Tolerance (aBFT): aBFT is a consensus algorithm used to provide fault tolerance for distributed systems within asynchronous networks. It is based on the Byzantine Fault Tolerance (BFT) algorithm and guarantees that, when properly configured and given tolerable network conditions, consensus will eventually be reached, even when faults such as computer crashes, cyber-attacks, or malicious manipulation have occurred. aBFT is designed to tolerate malicious or faulty actors as long as those actors make up less than one-third of the nodes in the network.

Blockchain: A peer to peer, decentralized, immutable digital ledger used to securely and efficiently store data that is permanently linked and secured using cryptography.

Byzantine Fault Tolerance: A fault-tolerance property of distributed computing systems in which multiple replicas are used to reach consensus, such that faulty nodes can be tolerated and the consensus of the system can be maintained despite errors or malicious attacks.

Consensus Algorithm: A consensus algorithm is a method of achieving agreement on a single data value across distributed systems. It is typically used in blockchain networks to arrive at a common data state and ensure consensus across network participants. It is used to achieve fault-tolerance in distributed systems and allows for the distributed system to remain operational even if some of its components fail.

Consensus Protocols: A consensus protocol is an algorithm used to achieve agreement among distributed systems on the state of data within the system. Consensus protocols are essential in distributed systems to ensure there is no disagreement between the nodes on the data state, so that no node has a different view of the data. The protocols typically employ some form of voting, such as majority voting, or methods like proof of work, to achieve the necessary agreement on the data state.

Cryptographic Hashing: Cryptographic hashing is a process used to convert data into a fixed-length string of characters, or "hash", in order to protect the data and verify its authenticity. The hash cannot feasibly be reversed to recover the original data and is used extensively to ensure data integrity. Cryptographic hashes can be used to verify data integrity, authenticate data sources, and detect tampering.

Cryptography: The practice and study of techniques used to secure communication, data, and systems by transforming them into an unreadable format. Cryptography is an important component of cybersecurity, providing data protection and confidentiality.

Data Caching: Data caching is a software engineering technique that stores frequently accessed or computed data in a cache in order to quickly access that data when it is needed. Data caching works by temporarily storing data to serve it quickly when requested. This can significantly improve the performance of applications by reducing the amount of time spent serving requests.

Data Encryption: The process of encoding data using encryption algorithms in order to keep the content secure and inaccessible to users without a decryption key. Data encryption makes it difficult for unauthorized users to read confidential data.

Data Integrity: A quality of digital data that has been maintained over its entire life cycle, and is consistent and correct. Data integrity is ensured through specific protocols such as data validation and error detection and correction.

Data Partitioning: The process of splitting large data sets or workloads into smaller, more manageable units. This process allows for more accuracy in data processing and faster results, as well as the ability to easily store and access the data.

Data Sharding: Data Sharding is a partitioning technique which divides large datasets into smaller subsets which are stored across multiple nodes. It is used to improve scalability, availability and fault tolerance in distributed databases. When combined with replication, Data Sharding can improve the speed of queries by allowing them to run in parallel.

Decentralized Storage Network: A Decentralized Storage Network is a type of distributed system which utilizes distributed nodes to store and serve data securely, without relying on a single point of failure. It is an advanced form of data storage and sharing which provides scalability and redundancy, allowing for more secure and reliable access than that offered by conventional centralized systems.

Delegated Proof-of-Stake (DPoS): Delegated Proof-of-Stake (DPoS) is a consensus mechanism used in some blockchain networks. It works by allowing token holders to vote for a “delegate” of their choice to validate transactions and produce new blocks on their behalf. Delegates are rewarded for their work with a percentage of all transaction fees and new block rewards. DPoS networks are usually faster and more scalable than traditional PoW and PoS networks.

Digital Signatures: A digital signature is a mathematical scheme for demonstrating the authenticity of digital messages or documents. It is used for authenticating the source of messages or documents and for ensuring the integrity of messages or documents. A valid digital signature gives a recipient reason to believe that the message or document was created or approved by a known sender, and has not been altered in transit.

Directed Acyclic Graphs: A Directed Acyclic Graph (DAG) is a type of graph with directed edges that do not form a cycle. It consists of vertices (or nodes) and edges that connect the nodes. The direction of the edges between the nodes determines the flow of information from one node to another. A DAG is a useful structure for modeling data sets, such as queues and trees, to represent complex algorithms and processes in computer engineering.

Distributed Computing: A type of computing in which different computing resources, such as memory, hard disk space, and processor time are divided between different computers working as a single system. This gives the benefits of distributed computing like scalability, load balancing, reduced latency, and improved resiliency. It is widely used in data centers and cloud computing.

Distributed Consensus: The process of having a distributed system come to agreement on the state of an issue or order of operations. This is achieved through communication, verification and agreement from each of the connected nodes in the system.

Distributed Database: A distributed database is a type of database which stores different parts of its data across multiple devices that are connected to computer networks, allowing for more efficient data access by multiple users and more efficient transfer of data between different locations.

Distributed File System: A distributed file system is a file system that allows multiple computers to access and share files located on remote servers. It enables a computing system that consists of multiple computers to work together in order to store and manage data. The system can be seen as a large-scale, decentralized file storage platform that spans multiple nodes. Data is replicated across the computers so that if one computer goes down, another can take its place, which helps provide for high availability, scalability, and fault tolerance.

Distributed Ledger Technology: A type of database technology that maintains a continuously growing list of records, each stored as a block and protected by cryptography. It is a database shared across multiple nodes in a network, that keeps track of digital transactions between the nodes and is protected by airtight security and tamper-proof mechanisms. Transaction records are constantly updated and can be easily accessed.

Distributed Ledger Technology Platforms: A distributed ledger technology (DLT) platform is a secure digital platform that allows data to be securely shared and stored in a decentralized manner across a wide range of nodes within a network. DLT platforms provide a distributed ledger solution that is tamper-resistant, secured using cryptographic protocols, and can remain resilient even in the face of malicious actors. DLT platforms also enable secure and timely data exchanges, providing scalability, reliability and transparency for transactions.

Distributed Storage Services: Services that allow users to store data across multiple physical storage locations. This increases the reliability and availability of the data and allows distributed workloads to take advantage of it.

Distributed Storage System: A distributed storage system is a series of computer systems which interact together to manage, store, and back up massive amounts of data in a reliable and secure way. A distributed storage system is more fault-tolerant than conventional storage systems because its components are not vulnerable to a single point of failure. This allows a distributed storage system to provide higher levels of data durability and availability than a single, centralized system.

Distributed Systems: A type of computing system consisting of multiple, independent components that can communicate with each other to coordinate and synchronize their actions, creating a unified system as a whole. It is a type of software architecture that is designed to maximize resources across multiple computers connected over a network.

Distributed Systems Architecture: A distributed system is an interconnection of multiple independent computers that coordinate their activities and share the resources of a network, usually communicating via a message-passing interface or remote procedure calls. Distributed systems architecture includes design guidelines and approaches to ensure a system’s resilience, performance, scalability, availability, and security.

Erasure Coded File Storage: Erasure coded file storage is an archive strategy that divides data into portions and then encodes those portions with redundant pieces using error-correction algorithms. This redundancy makes erasure coding valuable for permanently storing critical data, as it increases reliability while reducing storage costs.

Fault Tolerance: Fault tolerance is the capability of a system to continue its normal operation despite the presence of hardware or software errors, disruptions, and even loss of components or data. Fault tolerant systems are designed to be resilient to failure and to continue normal operation in the event of a partial or total system failure.

Gossip Protocol: A distributed algorithm in which each member of a distributed system periodically communicates a message to one or more nodes, which then send the same message on to other nodes, until all members of the network receive the same message. This allows a system to remain aware of all other nodes and records in the system without the need for a central server.

Hashgraph: A distributed ledger platform that uses a virtual voting algorithm to achieve consensus faster than a traditional distributed ledger. This system allows for the secure transfer of digital objects and data without needing a third party for authentication. Its consensus algorithm is based on a gossip protocol, where user nodes are able to share news and updates with each other.

Hyperledger Fabric: Hyperledger Fabric is an open source software project that provides a foundation for developing applications that use distributed ledgers. It allows for secure and permissioned transactions between multiple businesses, enabling an ecosystem of participants to securely exchange data and assets. It provides support for smart contracts, digital asset management, encryption, and identity services.

Immutability: The capacity of an object to remain unchanged over time, even after multiple operations have been applied to it. Additionally, immutability is a property by which the object remains unchanged, and all operations on that object return a new instance instead of modifying the original.

Interoperability: The ability of systems or components to work together and exchange data, even though they may be from different manufacturers and have different technical specifications.

Key Management: Key management is the overall management of a set of cryptographic keys used to protect data both in transit over a network and in storage. Proper key management and control ensure secure communication within applications and systems and provide a baseline for protecting sensitive information. Key managers are responsible for the storage, rotation, and renewal of encryption keys and must ensure that the data is secure against unauthorized disclosure, modification, or loss.

Merkle Tree: A Merkle tree is a tree-based data structure used for verifying the integrity of large sets of data. It consists of a parent-child relationship of data nodes, with each block in the tree taking the data of all child blocks, hashing it together, and then creating a hash of its own. This process is repeated until a single block remains, which creates a hierarchical and hashed structure.

Multi-Node Clusters: A type of computing architecture, composed of multiple connected computers, or nodes, that work together and are able to act as a single system. A multi-node cluster allows for increased information processing and storage capacity, as well as increased reliability and availability.

Nodes: A node is a basic unit of a data structure, such as a linked list or tree data structure. Nodes contain data and also may link to other nodes. Nodes are used to implement graphs, linked lists, trees, stacks, and queues. They are also used to represent algorithms and data structures in computer science.

Oracles: An oracle is a system designed to provide users with a result of a query. These systems are often relied on to deliver accurate and reliable conclusions to users, based on the data they have provided. There have been several generations of oracles, ranging from hardware to software-based systems, each of which has different sets of capabilities.

Orchestration: Orchestration is the process of using software automation to manage and configure cloud-based computing services. It is used to automate the management and deployment of workloads across multiple cloud platforms, allowing organizations to gain efficiency and scalability.

Peer-to-Peer Networking: Peer-to-peer networking is a type of network architecture model where computers (or peer nodes) are connected together in such a way that each node can act as a client or a server for the other nodes in the network. In other words, it does not rely on a central server to manage the communication between the connected peers in the network.

Proof of Stake (PoS): A consensus mechanism in blockchain technology which allows nodes to validate transactions and produce new blocks according to the amount of coins each node holds. It is an alternative to the Proof of Work (PoW) consensus protocol. In PoS, validators stake their coins, meaning they have to deposit coins with the blockchain protocol before they can validate blocks. Validators receive rewards for creating blocks and are penalized for malicious behavior.

Proof of Storage (PoSt): Proof of Storage is a cryptographic mechanism which is used to attest to the storage of data in a distributed storage network. The consensus model requires a randomly chosen subset of storage miners to periodically provide cryptographic proofs that they are storing data correctly and that all necessary nodes are online. The goal of PoSt is to ensure that stored data is secure, as well as to increase the security and transparency of distributed storage networks.

Proof of Work (PoW): A consensus algorithm by which a set of data called a “block” is validated by solving computationally taxing mathematical problems. It is used as a security measure in blockchains, as each block that is created must have a valid PoW for the block to be accepted. The difficulty of the computational problems typically adjusts over time as the blockchain grows, incentivizing the participants to continue to keep the chain running.

Proof-of-Stake (PoS): A consensus mechanism used in certain distributed ledger systems, where validator nodes participate in block validation with a combination of network participation and staking of tokens or other resources. The Proof-of-Stake consensus is an alternative to Proof-of-Work (PoW) used in many other blockchain networks.

Proof-of-Work: A concept used by blockchain networks to ensure consensus by requiring a certain amount of effort or work to make sure the blocks of data in the chain are legitimate and valid. This is done through large amounts of computational work, typically hashing algorithms.

Public Distributed Ledger: A public distributed ledger is a decentralized database that holds information about all the transactions that have taken place across the network, distributed and maintained by all participants without the need for a central authority to manage and validate it. All participants in the network can access, view, and validate the data stored on the ledger.
Public Key Infrastructure (PKI): A set of protocols, services, and standards that manage, distribute, identify and authenticate public encryption keys associated with users, computers, and organizations in a network. PKI allows secure communication and ensures that only the intended recipient can read the message. QuickP2P: A type of networking protocol focused on peer-to-peer (P2P) computing that allows users to exchange files and data quickly across multiple computers. QuickP2P works by breaking the files into small blocks, which can then be rapidly downloaded separately in parallel by the searching user. Quorum: The minimum number of nodes in a distributed system that must be engaged in order to reach a consensus. Quorum value is set based on the number of nodes in the system and must be greater than half of the total nodes present in the system. Replication: The process of creating redundant copies of data or objects in distributed systems for the purpose of fault-tolerant data storage or network operations. Routing Protocols: Protocols that direct traffic between computers across a network or the Internet, determining the routing path for data packets and exchanging information about available routes between routers. Routing protocols define the way routers communicate with each other to exchange network information and configure the best path for data traffic. Scalability: The ability of a system to increase its performance or capacity when given additional resources such as additional computing, memory, data storage, network bandwidth and power. Scalability is an important consideration for developing systems that must handle increasing amounts of data, workload or users. Secure Messaging: Secure messaging is the process of sending or receiving encrypted messages between two or more parties, with the intent of ensuring that only the intended recipient can access the contents of the message. 
The encryption process generally involves the use of public and private keys, making it secure and nearly impossible for anyone else to intercept any messages sent over the network. Secure Multi-Party Computation (MPC): A computer security framework that allows several parties to jointly compute a function over their private inputs without revealing anything other than their respective outputs to the other parties. MPC leverages the security of cryptography in order to achieve privacy and security in computation. Security Protocols: Security protocols are systems of standard rules and regulations created by computer networks to protect data and enable secure communication between devices. They are commonly used for authentication, encryption, confidentiality and data integrity. Smart Contracts: A type of protocol that is self-executing, autonomously carrying out the terms of an agreement between two or more parties when predetermined conditions are met. They are used to exchange money, assets, shares, or anything of value without the need for a third party or intermediary in a secure and trustless manner. Smart contracts are used widely within blockchain-based applications to reduce risk and increase speed and accuracy. Smart Contracts: Contracts written in computer code that are stored and executed on a blockchain network. Smart contracts are self-executing and contain the terms of an agreement between two or more parties that are written directly into the code. Smart contracts are irreversible and are enforced without the need for a third-party. State Machines: A state machine is a system composed of transitioning states, where each state is determined by the previous state and the current inputs. Each state has attached conditions and outputs, and when a the previous state and input conditions match the conditions of the current state, a single output will be generated. State machines are commonly used in computer engineering for finite automation. 
Synchronization Methods: Methods of ensuring that different parts of a distributed application or system are working from a shared same set of data at a given point in time. This can be achieved by actively sending data among components or by passively waiting for components to ask for data before sending it. Synchronous Byzantine Fault Tolerance (SBFT): A consensus algorithm for blockchain networks that requires each node to be connected and active for all network messages to be exchanged and validated in a given time period. This algorithm provides a way for a distributed network to come to consensus, even when some participants may be compromised or malicious. Time-Stamping: The technique of assigning a unique and precise time value to all events stored in a record in order to define an chronological order of those events. Virtual Voting: Digital voting system where citizens are able to cast their vote over the internet, directly or through a voting portal. It has been used as an alternative to physical voting polls, particularly during the pandemic. It can also be used to verify the accuracy and security of elections. Web 3.0: The Web 3.0 concept refers to the newest generation of the internet. It is highly decentralized and automated, based on AI and distributed ledger technologies such as blockchain. It allows for a more open and secure infrastructure for data storage, authentication, and interactions between devices across the web. It has the potential to be much smarter, faster, and more efficient than its predecessor Web 2.0.\

CLI

Get started within minutes

API

Instant interaction

SDKs

Advanced applications

Official SHDW and GenesysGo podcast appearances and articles

Direct Download (APK) | Solana App Store Link (coming soon) | Play Store Link (coming soon)

As a User:

the shdwDrive mobile app

Connect your Solana wallet

Choose a storage plan (start with 5GB free!)

Create your first bucket

As an Operator:

As of release 1.0.8, gossip tickets have been refreshed. All current operators must verify their ticket has correctly updated after upgrade/install.

the shdwDrive mobile app

properly

Navigate to the Operator tab

Select your storage level

As a Developer:

- integrate shdwDrive in your app

Need specific information? Jump to:

- New to shdwDrive? Start here

- Learn how to participate in the network

- integrate shdwDrive with your app

- Dive into the network architecture

Have questions? Our comprehensive sections below cover everything you need to know about using and operating on shdwDrive v2. Have feedback? Let us know .

Acquire a valid Join Ticket

Connect your shdwNode!

Economics - Dive deeper into how the protocol works

Requirement settings for shdwOperators

(S21 & Newer)

(6 & Newer)

(10 & Newer)

Go to Settings → Battery (or Battery saver / Battery optimization).

Locate shdwDrive in the list.

Set it to Not optimized or Unrestricted.

This ensures the system does not close the app in the background.

Settings → Apps → shdwDrive → Battery (or similar).

Toggle Allow background activity to On.

Disable any “Battery optimization” or “Restrict background data” specifically for shdwDrive.

Never manually force-stop the shdwDrive app.

Keep shdwDrive in your Recent Apps (avoid swiping it away).

If there’s an Auto-start / Auto-launch feature, turn it on for shdwDrive.

Allow it to run in the background continuously.

Exclude shdwDrive from any memory cleaners or “one-tap boost” apps.

Turn off automatic hibernation for the app.

Confirm shdwDrive is on any “Do not optimize” or “White list” for battery/memory.

If possible, keep your device plugged in while the node runs.

Avoid or disable aggressive power-saving modes that close apps at low battery.

Set your low-battery threshold to around 15% so shdwDrive won’t be shut down prematurely.

A dedicated power supply can help if you’re running the node for extended periods.

Disable Adaptive Battery specifically for shdwDrive.

Turn off “Optimize for battery life” for shdwDrive.

Remove it from any “sleeping apps” or “deep-sleep” lists.

If your device has Developer Options:

Set Background process limit to Standard or No limit.

Disable or reduce any “memory optimization” tools that might kill background processes.

Use High performance mode if available for stable connectivity.

Reduce or disable thermal throttling on gaming/performance phones if comfortable.

Ensure the device has adequate cooling to avoid forced closures.

Below are recommendations for devices running Android 12L or later. Menu names may vary by region or device.

Applies to: Galaxy S21, S22, S23, A53, A54, etc. (on Android 12L+)

Battery Settings

Settings → Battery → Background usage limits

Add shdwDrive to Unrestricted apps.

Applies to: Pixel 6, 6 Pro, 7, 7 Pro, 8, etc. (Android 12L+)

Battery Settings

Settings → Battery → Battery Saver

Turn it off or only use it manually.

Applies to: OnePlus 10, 10T, 11, etc. (Android 12L+)

Battery Optimization

Settings → Battery → Advanced → Battery optimization → shdwDrive → Don’t optimize.

Background Process

Applies to: Xiaomi / Redmi / POCO (2022+ devices running 12L+)

Battery & Performance

Settings → Battery & performance

Turn off Battery saver for shdwDrive or set to Unrestricted.

Applies to: OPPO/Realme devices running Android 12L+

Battery Settings

Settings → Battery → select High performance mode or exclude from Power saver.

App Management

Applies to: ZenFone 8/9, ROG Phone 5/6/7 on 12L+

Power Management

Settings → Battery → PowerMaster

Disable optimization for shdwDrive.

Auto-start manager → Allow shdwDrive.

Applies to: 2022+ models running Android 12L or later

Battery Settings

Settings → Battery → Turn off Adaptive Battery for shdwDrive or pick Unrestricted.

App Management

Applies to: Nothing Phone (1) & (2)

Battery Settings

Settings → Battery → Battery optimization → shdwDrive → Don’t optimize.

App Management

Applies to: Xperia devices launched or updated to 12L+

Battery Settings

Settings → Battery → turn off Adaptive Battery for shdwDrive.

Disable or bypass STAMINA mode if it kills the node.

Applies to: Vivo (OriginOS/Funtouch OS 12L+)

Battery Settings

Settings → Battery → Background power consumption → Allow for shdwDrive.

Exclude from power saving mode.

iManager Settings

Applies to: Nokia models from 2022 onward running 12L+

Power Settings

Settings → Battery → Battery optimization → Don’t optimize for shdwDrive.

Disable Adaptive Battery for the app if possible.

Applies to: TCL devices on Android 12L+ (2022 releases and newer)

Battery Management

Settings → Battery & Performance → App power saving mode → Off for shdwDrive

Smart Manager → auto-launch → Enable for shdwDrive

App Settings

Applies to: ZTE devices on Android 12L+ (e.g., Axon series)

Power Settings

Settings → Battery → power saving mode → exclude shdwDrive

App power saving → Off for shdwDrive

App Management

Applies to: Recent Lenovo tablets / phones with 12L or later

Battery Settings

Settings → Battery → Allow background app management for shdwDrive

Exclude from power saving modes.

Security Settings

Applies to: Black Shark 5 Series and later (Android 12L+)

Game Dock Settings

Game Dock → Performance → CPU/GPU → Performance mode

Background process → Allow shdwDrive

Battery Settings

Applies to: Infinix/Tecno models running 12L+

Power Management

Settings → Battery → Power Management → Off for shdwDrive

Background apps → Allow

Phone Master

Check Settings After Updates: System updates can revert your battery or background settings. Review them after each update.

Stay Plugged In: A stable power source ensures you won’t lose node connectivity when battery runs low.

Monitor Node Activity: If the node goes offline, revisit settings to confirm none have changed.

By following these instructions on your Android 12L+ device, you’ll help keep shdwDrive running reliably in the background for maximum node uptime. Good luck and happy node-running!

Xiaomi / POCO (Newer Models)

OPPO / Realme (Android 12L+)

ASUS (ZenFone / ROG on 12L+)

Motorola (2022+ Models)

Nothing Phone (1, 2)

Sony Xperia (Recent Models)

Vivo (Android 12L+)

Nokia (2022+ Models)

TCL (12L+ Releases)

ZTE (12L+ Releases)

Lenovo (Recent Tablets / Phones)

Black Shark (5 Series & Newer)

Infinix / Tecno (Current 12L+ Models)

App Management

Settings → Apps → shdwDrive → Battery → Unrestricted

Under Mobile data, enable background data.

Keep shdwDrive in memory if such an option is present.

Device Care

Settings → Device care → Battery → Turn off Adaptive power saving

Choose High performance mode if needed.

Background Process

Settings → Apps → Special app access → Background restrictions

Make sure shdwDrive is allowed in the background.

Memory Management

Settings → Developer options → Background process limit → Standard.

Settings → Apps → shdwDrive → Battery → Don’t optimize

Allow background data usage.

System Settings

Settings → System settings → RAM boost → Disable if it kills background tasks.

Battery → Intelligent Control → Turn off for shdwDrive.

Settings → Apps → Manage apps → shdwDrive

Autostart → Enable

Battery saver → No restrictions

Security App (If applicable)

In Security → Battery optimization → Disable for shdwDrive.

Exclude from memory optimization as well.

Battery → Allow background activity

Startup/Auto-launch → Enable

System Settings

Settings → Additional Settings → Background app management → Allow shdwDrive.

Mobile Manager

Mobile Manager → PowerMaster → High performance

Keep shdwDrive unrestricted in background.

App Specific

Settings → Apps → shdwDrive → allow auto-start and background activity.

Mobile data & Wi-Fi → Allow background data.

Performance

Settings → System → (Developer options or Gestures) → ensure no forced app closures.

Battery usage → Unrestricted

Background process → Allow

System Settings

Settings → System → Developer options → set Background process limit to Standard or no limit.

Settings → Apps → shdwDrive → Advanced → Battery optimization → Don’t optimize.

Allow background data usage.

Power Management

Check that Adaptive battery is off or not affecting shdwDrive.

Let the app run freely in background settings.

iManager → App Manager → Auto-start → Enable for shdwDrive.

Background running → Allow.

App Management

Settings → Apps → shdwDrive → Background wake up → Allow

Background running permission → Allow

Settings → Apps & notifications → shdwDrive → Advanced → Background restrictions → Off

Battery → Unrestricted

Background Activity

Settings → System → Developer options → Background process limit → Standard

Settings → Apps → shdwDrive → Battery → Don’t restrict

Background process → Allow

System Optimization

Settings → Privacy → Smart Manager → Battery optimization → Disable for shdwDrive

Settings → Apps → shdwDrive → Battery → Unrestricted

Auto-start → Enable

System Settings

Settings → Power Manager → Battery optimization → Don’t optimize for shdwDrive

Security Center → App management → Auto-start → enable for shdwDrive

Background apps → allow

App Specific

Settings → Apps → shdwDrive → Battery → Don’t optimize

Background mobile data → Allow

Settings → Battery → App battery saver → disable for shdwDrive

Performance mode → On when plugged in

System Settings

Settings → Additional settings → Developer options → Background process → Standard

Phone Master → Auto-start → Enable shdwDrive

Background running → Allow

App Management

Settings → Apps → shdwDrive → Power usage → Don’t restrict

Auto-launch → Enable

shdwDrive v1.5 is no longer maintained. Please migrate to v2 and consult the new developer guide for instructions.

Verify that the ShdwDrive network is up and running. https://status.genesysgo.net/

Check the ShdwDrive Change Log for any known issues or bugs that may be causing the problem. https://docs.shadow.cloud/reference/change-logs

Contact ShdwDrive support for further assistance. https://discord.gg/genesysgo

There's ongoing development to increase the maximum file size.

Check all of your versions and dependencies. Your Solana wallet adapter dependencies and the version of the JavaScript SDK must be up to date.

Double check the wallet you have chosen to work with is not having issues. You may need to reach out to them directly.

Please provide a clear and concise description of the issue, steps to reproduce it, and any relevant screenshots or logs.

Label your issue as a 'bug' or 'security' accordingly.

Important: For security-related issues, do not include sensitive information in the issue description. Instead, submit a pull request to our repository, containing the necessary details, so that the information remains concealed until the issue is resolved.

Security related issues should only be reported through this repository.

shdwDrive v1.5 is no longer maintained. Please migrate to v2 and consult the new developer guide for instructions.

POST https://shadow-storage.genesysgo.net

Creates a new storage account

Request content type: application/json

POST https://shadow-storage.genesysgo.net

Gets on-chain and ShdwDrive Network data about a storage account

Request content type: application/json
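The storage account fields documented in this reference's response shape (reserved_bytes, current_usage, immutable, and so on) can be typed on the client side. The following is a minimal sketch, assuming the response arrives as parsed JSON with numeric byte counts and public keys serialized as strings. The interface name `StorageAccountInfo` and the helper `usagePercent` are hypothetical and not part of any shdwDrive SDK.

```typescript
// Hypothetical client-side type covering a subset of the documented response fields.
// Assumption: public keys are serialized as strings in the JSON payload.
interface StorageAccountInfo {
  storage_account: string;
  reserved_bytes: number;
  current_usage: number;
  immutable: boolean;
  to_be_deleted: boolean;
  version: "V1" | "V2";
}

// Returns how much of the reserved space is in use, as a percentage.
function usagePercent(info: StorageAccountInfo): number {
  if (info.reserved_bytes <= 0) return 0;
  return (info.current_usage / info.reserved_bytes) * 100;
}

// Illustrative values only; not real account data.
const exampleAccount: StorageAccountInfo = {
  storage_account: "ABC123",
  reserved_bytes: 4096,
  current_usage: 1024,
  immutable: false,
  to_be_deleted: false,
  version: "V2",
};

console.log(usagePercent(exampleAccount).toFixed(1) + "% of reserved storage used");
```

A check like this can be useful before uploading, to decide whether storage needs to be added first.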

POST https://shadow-storage.genesysgo.net

Uploads a single file or multiple files at once

Request content type: multipart/form-data

Parameters (FormData fields)

POST https://shadow-storage.genesysgo.net

Edits an existing file

Request content type: multipart/form-data

Parameters (FormData fields)

POST https://shadow-storage.genesysgo.net

Get a list of all files associated with a storage account

Request content type: application/json
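A JSON request for listing files can be assembled as in the sketch below. It assumes the request body carries the `storageAccount` field described in this reference's parameter list; the helper name `buildListObjectsRequest` and the `JsonPostRequest` type are hypothetical. The endpoint path is not shown in this section, so the URL is deliberately left to the caller.

```typescript
// Hypothetical shape of the options object passed to fetch().
interface JsonPostRequest {
  method: "POST";
  headers: { "Content-Type": "application/json" };
  body: string;
}

// Builds the request options for listing the files in a storage account.
// Assumption: the body contains a single `storageAccount` string field.
function buildListObjectsRequest(storageAccount: string): JsonPostRequest {
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ storageAccount }),
  };
}

const listRequest = buildListObjectsRequest("ABC123");
console.log(listRequest.body);
```

The returned object can be passed as the second argument to `fetch`, together with the full endpoint URL for the list operation.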

POST https://shadow-storage.genesysgo.net

Get a list of all files and their size associated with a storage account

Request content type: application/json

POST https://shadow-storage.genesysgo.net

Get information about an object

Request content type: application/json

POST https://shadow-storage.genesysgo.net

Deletes a file from a given Storage Account

Request content type: application/json

POST https://shadow-storage.genesysgo.net

Adds storage

Request content type: application/json

POST https://shadow-storage.genesysgo.net

Reduces storage

Request content type: application/json

POST https://shadow-storage.genesysgo.net

Makes file immutable

Request content type: application/json

This example demonstrates how to securely upload files to the ShdwDrive using the provided API. It includes the process of hashing file names, creating a signed message, and sending the files along with the necessary information to the ShdwDrive endpoint.
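Once an upload completes, the response documented in this reference carries `finalized_locations`, `message`, and `upload_errors`. The sketch below shows one way to type and summarize that response. The type names and the `summarizeUpload` helper are hypothetical, and it assumes `upload_errors` is an empty array when nothing failed, as the response description states.

```typescript
// Hypothetical types mirroring the documented upload response.
interface UploadError {
  file: string;
  storage_account: string;
  error: string;
}

interface UploadResponse {
  finalized_locations: string[];
  message: string;
  upload_errors: UploadError[];
}

// Produces a one-line summary and lists any files that failed.
function summarizeUpload(res: UploadResponse): string {
  const ok = res.finalized_locations.length;
  const failed = res.upload_errors.length;
  const lines = [ok + " uploaded, " + failed + " failed"];
  for (let i = 0; i < res.upload_errors.length; i++) {
    lines.push("  " + res.upload_errors[i].file + ": " + res.upload_errors[i].error);
  }
  return lines.join("\n");
}

// Illustrative response; the values are made up.
const sampleResponse: UploadResponse = {
  finalized_locations: ["https://shdw-drive.genesysgo.net/ABC123/a.png"],
  message: "done",
  upload_errors: [{ file: "b.png", storage_account: "ABC123", error: "too large" }],
};

console.log(summarizeUpload(sampleResponse));
```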

In this example, we demonstrate how to edit a file in ShdwDrive using the API and message signature verification. The code imports necessary libraries, constructs a message to be signed, encodes and signs the message, and sends an API request to edit the file on ShdwDrive.

In this example, we demonstrate how to delete a file from the ShdwDrive using a signed message and the ShdwDrive API. The code first constructs a message containing the storage account and the file URL to be deleted. It then encodes and signs the message using the tweetnacl library. The signed message is then converted to a bs58-encoded string. Finally, a POST request is sent to the ShdwDrive API endpoint to delete the file.
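The two pure steps of that flow, building the message text and base58-encoding a byte array, can be illustrated without any dependencies. This is a sketch only: `buildDeleteMessage` and `base58Encode` are hypothetical helpers, the message template is copied from the delete sample in this reference, and the hand-written encoder stands in for the `bs58` package (it uses the same Bitcoin-style alphabet). Signing itself is not shown here; the samples in this reference use tweetnacl for that step.

```typescript
const BASE58_ALPHABET = "123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz";

// Encodes bytes as base58. Each leading zero byte becomes a leading "1".
function base58Encode(bytes: Uint8Array): string {
  const digits: number[] = []; // base-58 digits, least significant first
  for (let b = 0; b < bytes.length; b++) {
    let carry = bytes[b];
    for (let i = 0; i < digits.length; i++) {
      carry += digits[i] * 256;
      digits[i] = carry % 58;
      carry = Math.floor(carry / 58);
    }
    while (carry > 0) {
      digits.push(carry % 58);
      carry = Math.floor(carry / 58);
    }
  }
  let out = "";
  for (let b = 0; b < bytes.length && bytes[b] === 0; b++) out += "1";
  for (let i = digits.length - 1; i >= 0; i--) out += BASE58_ALPHABET[digits[i]];
  return out;
}

// Builds the text that gets signed for a delete request.
// The template is taken from the delete sample in this reference.
function buildDeleteMessage(storageAccount: string, url: string): string {
  return `ShdwDrive Signed Message:\nStorageAccount: ${storageAccount}\nFile to delete: ${url}`;
}

console.log(buildDeleteMessage("ABC123", "https://shdw-drive.genesysgo.net/ABC123/file.png"));
console.log(base58Encode(new Uint8Array([0x00, 0x00, 0x28, 0x7f, 0xb4, 0xcd])));
```

Because the network verifies the signature against the exact message bytes, any difference in spacing or line breaks changes the signed bytes and causes verification to fail.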

url* (String): URL of the original file you want to edit. Example: https://shdw-drive.genesysgo.net/<storage-account>/<file-name>

transaction*: Serialized create storage account transaction that's partially signed by the storage account owner

storage_account* (String): Public key of the storage account you want to get information for

file*: The file you want to upload. You may add up to 5 files, each with a field name of file.

message* (String): Base58 message signature.

signer* (String): Public key of the signer of the message signature and owner of the storage account

storage_account* (String): Key of the storage account you want to upload to

file* (String): The file you want to upload. You may add up to 5 files, each with a field name of file.

message* (String): Base58 message signature.

signer* (String): Public key of the signer of the message signature and owner of the storage account

storage_account* (String): Key of the storage account you want to upload to

storageAccount (String): String version of the storage account PublicKey that you want to get a list of files for

storageAccount* (String): String version of the storage account PublicKey that you want to get a list of files for

location* (String): URL of the file you want to get information for

message (String): Base58 message signature.

signer (String): Public key of the signer of the message signature and owner of the storage account

location (String): URL of the file you want to delete

transaction* (String): Serialized add storage transaction that is partially signed by the ShdwDrive network

transaction* (String): Serialized reduce storage transaction that is partially signed by the ShdwDrive network

transaction (String): Serialized make immutable transaction that is partially signed by the ShdwDrive network

{
  "shdw_bucket": String,
  "transaction_signature": String
}

{
  storage_account: PublicKey,
  reserved_bytes: Number,
  current_usage: Number,
  immutable: Boolean,
  to_be_deleted: Boolean,
  delete_request_epoch: Number,
  owner1: PublicKey,
  owner2: PublicKey,
  accountCoutnerSeed: Number,
  creation_time: Number,
  creation_epoch: Number,
  last_fee_epoch: Number,
  identifier: String,
  version: "V1"
}

{
  storage_account: PublicKey,
  reserved_bytes: Number,
  current_usage: Number,
  immutable: Boolean,
  to_be_deleted: Boolean,
  delete_request_epoch: Number,
  owner1: PublicKey,
  accountCoutnerSeed: Number,
  creation_time: Number,
  creation_epoch: Number,
  last_fee_epoch: Number,
  identifier: String,
  version: "V2"
}

{
  "finalized_locations": [String],
  "message": String,
  "upload_errors": [{file: String, storage_account: String, error: String}] or [] if no errors
}

{
  "finalized_location": String,
  "error": String or not provided if no error
}

{
  "keys": [String]
}

{
  "files": [{"file_name": String, size: Number}]
}

JSON object of the file's metadata in the ShdwDrive Network or an error

{
  "message": String,
  "error": String or not passed if no error
}

{
  message: String,
  transaction_signature: String,
  error: String or not provided if no error
}

{
  message: String,
  transaction_signature: String,
  error: String or not provided if no error
}

{
  message: String,
  transaction_signature: String,
  error: String or not provided if no error

}

import bs58 from 'bs58'
import nacl from 'tweetnacl'
import crypto from 'crypto'

// `files` is an array of each file passed in.
const allFileNames = files.map(file => file.fileName)
const hashSum = crypto.createHash("sha256")
// `allFileNames.toString()` creates a comma-separated list of all the file names.
hashSum.update(allFileNames.toString())
const fileNamesHashed = hashSum.digest("hex")

// `storageAccount` is the string representation of a storage account pubkey
const message = `Shadow Drive Signed Message:\nStorage Account: ${storageAccount}\nUpload files with hash: ${fileNamesHashed}`;

// Note: the options-object form of append() used below matches the `form-data` npm package;
// the browser FormData takes a filename string as its third argument instead.
const fd = new FormData();
// `files` is an array of each file passed in
for (let j = 0; j < files.length; j++) {
  fd.append("file", files[j].data, {
    contentType: files[j].contentType as string,
    filename: files[j].fileName,
  });
}

// Expect the final message string to look like this if you were to output it:
// Shadow Drive Signed Message:
// Storage Account: ABC123
// Upload files with hash: hash1
// If the message is not formatted exactly like the above, it will fail message signature verification
// on the ShdwDrive Network side.
const encodedMessage = new TextEncoder().encode(message);

// Uses https://github.com/dchest/tweetnacl-js to sign the message. If it's not signed in the same manner,
// the message will fail signature verification on the ShdwDrive Network side.
// This returns the signature as a byte array (Uint8Array).
const signedMessage = nacl.sign.detached(encodedMessage, keypair.secretKey);

// Convert the byte array to a bs58-encoded string
const signature = bs58.encode(signedMessage)

fd.append("message", signature);
fd.append("signer", keypair.publicKey.toString());
fd.append("storage_account", storageAccount.toString());
fd.append("fileNames", allFileNames.toString());

const request = await fetch(`${SHDW_DRIVE_ENDPOINT}/upload`, {
  method: "POST",
  body: fd,
});

import bs58 from 'bs58'

import nacl from 'tweetnacl'

// `storageAccount` is the string representation of a storage account pubkey
// `fileName` is the name of the file to be edited
// `sha256Hash` is the sha256 hash of the new file's contents
const message = `ShdwDrive Signed Message:\n StorageAccount: ${storageAccount}\nFile to edit: ${fileName}\nNew file hash: ${sha256Hash}`

// Expect the final message string to look like this if you were to output it
// (note the leading space before "StorageAccount" in the template above):
// ShdwDrive Signed Message:
//  StorageAccount: ABC123
// File to edit: file.png
// New file hash: <sha256 hash of the new file's contents>
// If the message is not formatted exactly like the above, it will fail message signature verification
// on the ShdwDrive Network side.
const encodedMessage = new TextEncoder().encode(message);

// Uses https://github.com/dchest/tweetnacl-js to sign the message. If it's not signed in the same manner,
// the message will fail signature verification on the ShdwDrive Network side.
// This returns the signature as a byte array (Uint8Array).
const signedMessage = nacl.sign.detached(encodedMessage, keypair.secretKey);

// Convert the byte array to a bs58-encoded string
const signature = bs58.encode(signedMessage)

const fd = new FormData();
fd.append("file", fileData, {
  contentType: fileContentType as string,
  filename: fileName,
});
fd.append("signer", keypair.publicKey.toString())
fd.append("message", signature)
fd.append("storage_account", storageAccount)

const uploadResponse = await fetch(`${SHDW_DRIVE_ENDPOINT}/edit`, {
  method: "POST",
  body: fd,
});

import bs58 from 'bs58'

import nacl from 'tweetnacl'

// `storageAccount` is the string representation of a storage account pubkey

// `url` is the link to the ShdwDrive file, just like the previous implementation needed the url input

const message = `ShdwDrive Signed Message:\nStorageAccount: ${storageAccount}\nFile to delete: ${url}`

// Expect the final message string to look something like this if you were to output it

// ShdwDrive Signed Message:

// Storage Acount: ABC123

// File to delete: https://shadow-drive.genesysgo.net/ABC123/file.png

// If the message is not formatted like above exactly, it will fail message signature verification

// on the ShdwDrive Network side.

const encodedMessage = new TextEncoder().encode(message);

// Uses https://github.com/dchest/tweetnacl-js to sign the message. If it's not signed in the same manor,

// the message will fail signature verification on the Shdw Network side.

// This will return a base58 byte array of the signature.

const signedMessage = nacl.sign.detached(encodedMessage, keypair.secretKey);

// Convert the byte array to a bs58-encoded string

const signature = bs58.encode(signedMessage)

const deleteRequestBody = {

signer: keypair.publicKey.toString(),

message: signature,

location: options.url

}

const deleteRequest = await fetch(`${SHDW_DRIVE_ENDPOINT}/delete-file`, {

method: "POST",

headers: {

"Content-Type": "application/json"

},

body: JSON.stringify(deleteRequestBody)

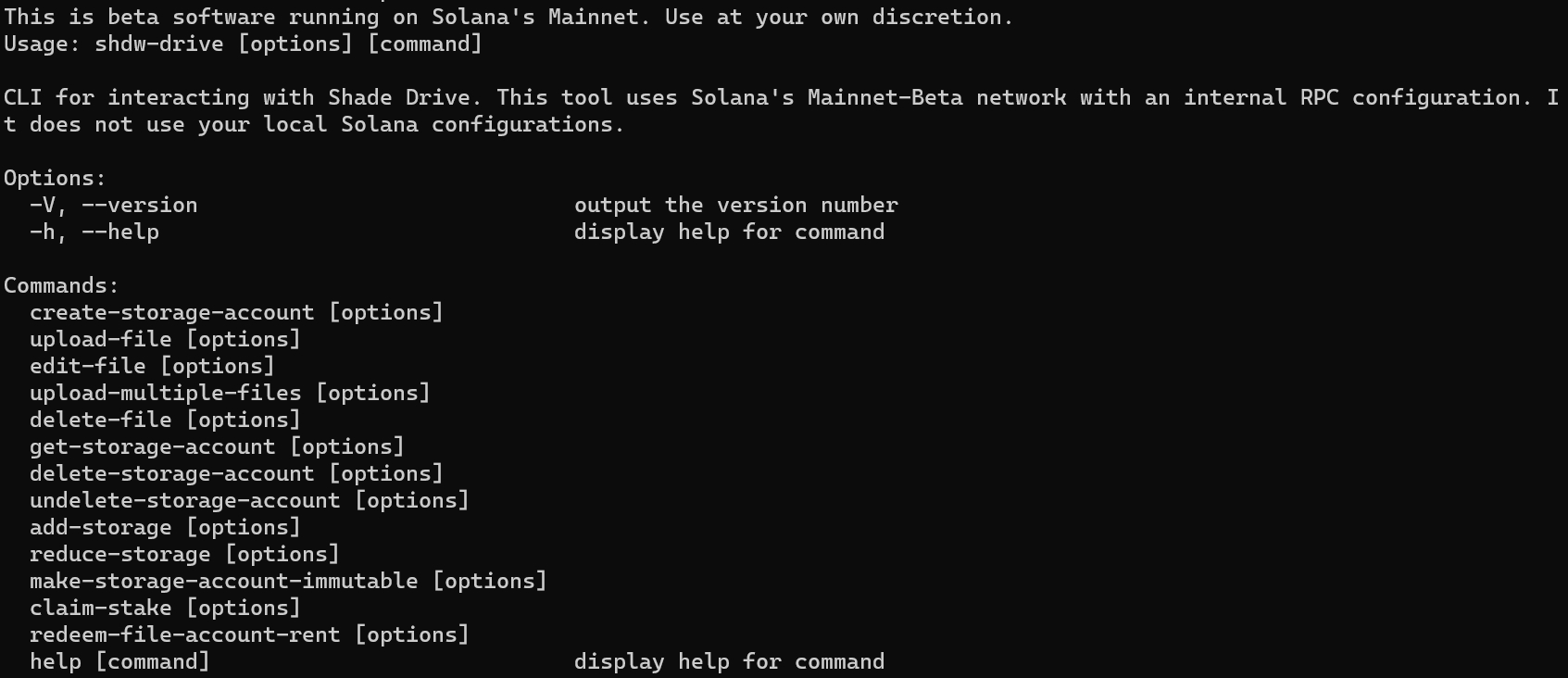

})The CLI is the easiest way to interact with shdwDrive. You can use your favorite shell scripting language, or just type the commands one at a time. For test driving shdwDrive, this is the best way to get started.

Prerequisites: Install Node.js LTS 16.17.1 on any OS.

Then run the following command

In order to interact with shdwDrive, we're going to need a Solana wallet and CLI to interact with the Solana blockchain.

NOTE: The shdwDrive CLI uses its own RPC configuration. It does not use your Solana environment configuration.

Check HERE for the latest version.

Upon install, follow that up immediately with:

We need a keypair in .json format to use the shdwDrive CLI. This is going to be the wallet that owns the storage account. If you want, you can convert your browser wallet into a .json file by exporting the private keys. Solflare by default exports it in a .json format (it looks like a standard array of integers, [1,2,3,4...]). Phantom, however, needs some help, and we have just the tool to do that.

If you want to create a new wallet, just use

You will see it write a new keypair file, and it will display the pubkey, which is your wallet address.

You'll need to send a small amount of SOL and SHDW to that wallet address to proceed! The SOL is used to pay for transaction fees, the SHDW is used to create (and expand) the storage account!

shdwDrive CLI comes with integrated help. All shdwDrive commands begin with shdw-drive.

The above command will yield the following output

You can get further help on each of these commands by typing the full command, followed by the --help option.

This is one of the few commands where you will need SHDW. Before the command executes, it will prompt you as to how much SHDW will be required to reserve the storage account. There are three required options:

-kp, --keypair

Path to wallet that will create the storage account

-n, --name

What you want your storage account to be named. (Does not have to be unique)

-s, --size

Amount of storage you are requesting to create. This should be in a string like '1KB', '1MB', '1GB'. Only KB, MB, and GB storage delineations are supported.
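Size strings of this form can be validated before they are passed to the CLI. The sketch below is an illustration only: `parseSizeString` and `ParsedSize` are hypothetical names, and it assumes a whole-number amount followed directly by an uppercase KB, MB, or GB unit, matching the examples above. It does not convert to bytes, since this section does not say which multiplier the CLI uses.

```typescript
type SizeUnit = "KB" | "MB" | "GB";

interface ParsedSize {
  amount: number;
  unit: SizeUnit;
}

// Returns the parsed size, or null when the string is not in a supported form.
// Assumption: whole-number amounts and uppercase units only, e.g. "1KB", "250MB", "2GB".
function parseSizeString(input: string): ParsedSize | null {
  const match = /^(\d+)(KB|MB|GB)$/.exec(input.trim());
  if (match === null) return null;
  const amount = parseInt(match[1], 10);
  if (amount <= 0) return null;
  return { amount, unit: match[2] as SizeUnit };
}

console.log(parseSizeString("1GB"));   // a supported size string
console.log(parseSizeString("1TB"));   // unsupported unit
```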

Example:

Options for this command:

-kp, --keypair

Path to wallet that will upload the file

-f, --file

File path. Current file size limit is 1GB through the CLI.

If you have multiple storage accounts it will present you with a list of owned storage accounts to choose from. You can optionally provide your storage account address with:

-s, --storage-account

Storage account to upload file to.

--rpc <your-RPC-endpoint>

RPC endpoint to pass custom endpoint. This can resolve 410 errors if you are using methods not available from the default free public endpoint.

Example 1:

Example 2 with RPC:

A more realistic use case is to upload an entire directory of, say, NFT images and metadata. It's basically the same thing, except we point the command to a directory.

Options:

-kp, --keypair

Path to wallet that will upload the files

-d, --directory

Path to folder of files you want to upload.

-s, --storage-account

Storage account to upload file to.

-c, --concurrent

Number of concurrent batch uploads. (default: "3")

--rpc <your-RPC-endpoint>

RPC endpoint to pass custom endpoint. This can resolve 410 errors if you are using methods not available from the default free public endpoint.

Example 1:

Example 2 with RPC:

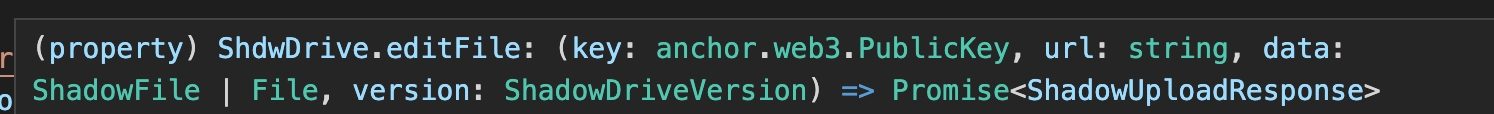

This command is used to replace an existing file that has the exact same name. If you attempt to upload this file using edit-file and an existing file with the same name is not already there, the request will fail.

There are three requirements for this command:

-kp, --keypair

Path to wallet that will upload the file

-f, --file

File path. Current file size limit is 1GB through the CLI. File must be named the same as the one you originally uploaded

-u, --url

ShdwDrive URL of the file you are requesting to edit
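Because the replacement file must carry the same name as the file already stored, a quick local check can catch mismatches before the request is sent. The following is a sketch under the assumption that the stored file name is the last path segment of its ShdwDrive URL; `fileNameFromUrl`, `baseName`, and `namesMatchForEdit` are hypothetical helpers and not part of the CLI.

```typescript
// Extracts the last path segment of a URL, ignoring any query string.
function fileNameFromUrl(url: string): string {
  const withoutQuery = url.split("?")[0];
  return decodeURIComponent(withoutQuery.substring(withoutQuery.lastIndexOf("/") + 1));
}

// Extracts the file name from a local path using either / or \ separators.
function baseName(localPath: string): string {
  const parts = localPath.split(/[\\/]/);
  return parts[parts.length - 1];
}

// True when the local file has the same name as the file behind the URL.
function namesMatchForEdit(localPath: string, url: string): boolean {
  return baseName(localPath) === fileNameFromUrl(url);
}

const targetUrl = "https://shdw-drive.genesysgo.net/ABC123/file.png";
console.log(namesMatchForEdit("./images/file.png", targetUrl));
console.log(namesMatchForEdit("./images/other.png", targetUrl));
```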

Example:

Deleting a file is straightforward, but note that once a file is deleted, it's gone for good.

There are two requirements and there aren't any options outside of the standard ones:

-kp, --keypair

Path to the keypair file for the wallet that owns the storage account and file

-u, --url

ShdwDrive URL of the file you are requesting to delete

Example:

You can expand the storage size of a storage account. This command consumes SHDW tokens.

There are only two requirements for this call:

-kp, --keypair

Path to the keypair file for the wallet that owns the storage account

-s, --size

Amount of storage you are requesting to add to your storage account. Should be a string like '1KB', '1MB', or '1GB'. Only KB, MB, and GB units are currently supported.

If you have more than one account, you'll get to pick which storage account you want to add storage to.
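A size string can be validated locally before it is passed to -s. The sketch below is an illustration only: `size_to_bytes` is a hypothetical helper, not part of the shdw-drive CLI, and it assumes binary multiples (1KB = 1024 bytes), which may differ from how the CLI itself interprets units.

```shell
# Hypothetical helper; not part of the shdw-drive CLI.
# Converts a size string such as 1KB, 100MB, or 2GB to bytes.
# Assumption: binary multiples (1KB = 1024 bytes).
size_to_bytes() {
  case $1 in
    *KB) n=${1%KB}; mult=1024 ;;
    *MB) n=${1%MB}; mult=$((1024 * 1024)) ;;
    *GB) n=${1%GB}; mult=$((1024 * 1024 * 1024)) ;;
    *) echo "unsupported unit in '$1' (use KB, MB, or GB)" >&2; return 1 ;;
  esac
  # Reject empty, zero-prefixed, or non-integer amounts such as "KB" or "1.5MB".
  case $n in
    ''|0*|*[!0-9]*) echo "invalid amount in '$1'" >&2; return 1 ;;
  esac
  echo $((n * mult))
}

size_to_bytes 100MB   # prints 104857600
```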

Example:

You can reduce your storage account and reclaim your unused SHDW tokens. This is a two part operation where you first reduce your account size, and then request your SHDW tokens. First, let's reduce the storage account size.

There are two requirements:

-kp, --keypair

Path to the keypair file for the wallet that owns the storage account

-s, --size

Amount of storage you are requesting to remove from your storage account. Should be a string like '1KB', '1MB', or '1GB'. Only KB, MB, and GB units are currently supported.

Example:

Since you reduced the amount of storage being used in the previous step, you are now free to claim your unused SHDW tokens. The only requirement here is a keypair.

Example:
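The two steps (reduce the account, then claim the unused SHDW) can be chained so that the claim only runs if the reduction succeeds. This is a minimal sketch: `run` and `reduce_and_claim` are hypothetical wrappers, and by default the commands are only printed rather than executed.

```shell
# Hypothetical wrappers; only the shdw-drive subcommands come from the CLI.
# By default commands are printed, not executed. Set DRY_RUN=0 to run them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Reduce the storage account first, then claim the freed tokens.
# The claim step is skipped if the reduce step fails.
reduce_and_claim() {
  keypair=$1
  size=$2
  run shdw-drive reduce-storage -kp "$keypair" -s "$size" &&
    run shdw-drive claim-stake -kp "$keypair"
}

reduce_and_claim ~/shdw-keypair.json 500MB
```

Both commands may still prompt you to select a storage account if the wallet owns more than one.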

You can entirely remove a storage account from ShdwDrive. Upon completion, your SHDW tokens will be returned to the wallet.

NOTE: You have a grace period upon deletion that lasts until the end of the current Solana epoch. Check how much time remains in the current Solana epoch to know how long your grace period will be.
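For a rough sense of how long the grace period might last, the remaining slots in the epoch can be converted to minutes. This is a back-of-the-envelope sketch, assuming about 400 ms per slot (Solana's target slot time) and 432000 slots per epoch; real slot times drift, so treat the result as approximate. `epoch_minutes_left` is a hypothetical helper, and the slot figures can be read from the Solana CLI's `solana epoch-info` output.

```shell
# Rough estimate only. Assumption: ~400 ms per slot; actual slot times vary.
epoch_minutes_left() {
  slot_index=$1       # slots already completed in the current epoch
  slots_in_epoch=$2   # total slots in the epoch (assumed 432000)
  remaining=$((slots_in_epoch - slot_index))
  # remaining slots * 400 ms, converted to seconds, then to minutes
  echo $((remaining * 400 / 1000 / 60))
}

epoch_minutes_left 216000 432000   # prints 1440 (about one day)
```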

All you need here is a keypair, and it will prompt you for the specific storage account to delete.

Example:

Assuming the epoch is still active, you can undelete your storage account. You only need a keypair. You will be prompted to select a storage account when running the command. This removes the deletion request.

One of the most distinctive and useful features of ShdwDrive is that you can make your storage truly permanent. With immutable storage, no file that was uploaded to the account can ever be deleted or edited. The files are permanent, as is the storage account itself. You can still upload files to an immutable account and add storage to it.

The only requirement is a keypair. You will be prompted to select a storage account when running the command.

Example:

Create an account on which to store data. Storage accounts can be globally, irreversibly marked immutable for a one-time fee. Otherwise, files can be added or deleted from them, and space rented indefinitely.

Parameters:

--name

String

--size

Byte (accepts KB, MB, GB)

Example:

Queues a storage account for deletion. While the request is still enqueued and not yet carried out, a cancellation can be made (see cancel-delete-storage-account subcommand).

Parameters:

--storage-account

Pubkey
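A Pubkey argument is a base58 string, so an obviously malformed value can be caught before calling the CLI. The sketch below is a syntactic check only, assuming a 32 to 44 character string over the base58 alphabet (no 0, O, I, or l). `looks_like_pubkey` is a hypothetical helper, and passing it does not prove the storage account exists.

```shell
# Hypothetical helper; syntactic check only.
# Accepts 32 to 44 characters drawn from the base58 alphabet.
looks_like_pubkey() {
  case $1 in
    # Any character outside the base58 alphabet fails the check.
    *[!123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz]*) return 1 ;;
  esac
  [ "${#1}" -ge 32 ] && [ "${#1}" -le 44 ]
}

if looks_like_pubkey FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB; then
  echo "plausible pubkey"
else
  echo "not a valid base58 pubkey string"
fi
```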

Example:

Example:

Cancels the deletion of a storage account enqueued for deletion.

Parameters:

--storage-account

Pubkey

Example:

Example:

Redeem tokens afforded to a storage account after reducing storage capacity.

Parameters:

--storage-account

Pubkey

Example:

Example:

Increase the capacity of a storage account.

Parameters:

--storage-account

Pubkey

--size

Byte (accepts KB, MB, GB)

Example:

Example:

Increase the immutable storage capacity of a storage account.

Parameters:

--storage-account

Pubkey

--size

Byte (accepts KB, MB, GB)

Example:

Example:

Reduce the capacity of a storage account.

Parameters:

--storage-account

Pubkey

--size

Byte (accepts KB, MB, GB)

Example:

Example:

Make a storage account immutable. This is irreversible.

Parameters:

--storage-account

Pubkey

Example:

Example:

Fetch the metadata pertaining to a storage account.

Parameters:

--storage-account

Pubkey

Example:

Example:

Fetch a list of storage accounts owned by a particular pubkey. If no owner is provided, the configured signer is used.

Parameters:

--owner

Option<Pubkey>

Example:

Example:

List all the files in a storage account.

Parameters:

--storage-account

Pubkey

Example:

Example:

Get a file, assume it's text, and print it.

Parameters:

--storage-account

Pubkey

--filename

String

Example:

Example:

Get basic file object data from a storage account file.

Parameters:

--storage-account

Pubkey

--file

String

Example:

Example:

Delete a file from a storage account.

Parameters:

--storage-account

Pubkey

--filename

String

Example:

Example:

Edit a file in a storage account.

Parameters:

--storage-account

Pubkey

--path

PathBuf

Example:

Example:

Upload one or more files to a storage account.

Parameters:

--batch-size

usize (default: value of FILE_UPLOAD_BATCH_SIZE)

--storage-account

Pubkey

--files

Vec<PathBuf>

Example:

Example:

npm install -g @shadow-drive/cli

sh -c "$(curl -sSfL https://release.solana.com/v1.14.3/install)"

export PATH="/home/sol/.local/share/solana/install/active_release/bin:$PATH"

solana-keygen new -o ~/shdw-keypair.json

shdw-drive help

shdw-drive create-storage-account --help

shdw-drive create-storage-account -kp ~/shdw-keypair.json -n "pony storage drive" -s 1GB

shdw-drive upload-file -kp ~/shdw-keypair.json -f ~/AccountHolders.csv

shdw-drive upload-file -kp ~/shdw-keypair.json -f ~/AccountHolders.csv --rpc <https://some-solana-api.com>

shdw-drive upload-multiple-files -kp ~/shdw-keypair.json -d ~/ponyNFT/assets/

shdw-drive upload-multiple-files -kp ~/shdw-keypair.json -d ~/ponyNFT/assets/ --rpc <https://some-solana-api.com>

shdw-drive edit-file --keypair ~/shdw-keypair.json --file ~/ponyNFT/01.json --url https://shdw-drive.genesysgo.net/abc123def456ghi789/0.json

shdw-drive delete-file --keypair ~/shdw-keypair.json --url https://shdw-drive.genesysgo.net/abc123def456ghi789/0.json

shdw-drive add-storage -kp ~/shdw-keypair.json -s 100MB

shdw-drive reduce-storage -kp ~/shdw-keypair.json -s 500MB

shdw-drive claim-stake -kp ~/shdw-keypair.json

shdw-drive delete-storage-account -kp ~/shdw-keypair.json

shdw-drive undelete-storage-account -kp ~/shdw-keypair.json

shdw-drive make-storage-account-immutable -kp ~/shdw-keypair.json

shadow-drive-cli create-storage-account --name example_account --size 10MB

shadow-drive-cli delete-storage-account --storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB

shadow-drive-cli delete-storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB

shadow-drive-cli cancel-delete-storage-account --storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB

shadow-drive-cli cancel-delete-storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB

shadow-drive-cli claim-stake --storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB

shadow-drive-cli claim-stake FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB

shadow-drive-cli add-storage --storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB --size 10MB

shadow-drive-cli add-storage FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB 10MB

shadow-drive-cli add-immutable-storage --storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB --size 10MB

shadow-drive-cli add-immutable-storage FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB 10MB

shadow-drive-cli reduce-storage --storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB --size 10MB

shadow-drive-cli reduce-storage FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB 10MB

shadow-drive-cli make-storage-immutable --storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB

shadow-drive-cli make-storage-immutable FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB

shadow-drive-cli get-storage-account --storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB

shadow-drive-cli get-storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB

shadow-drive-cli get-storage-accounts --owner FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB

shadow-drive-cli get-storage-accounts

shadow-drive-cli list-files --storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB

shadow-drive-cli list-files FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB

shadow-drive-cli get-text --storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB --filename example.txt

shadow-drive-cli get-text FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB example.txt

shadow-drive-cli get-object-data --storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB --file example.txt

shadow-drive-cli get-object-data FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB example.txt

shadow-drive-cli delete-file --storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB --filename example.txt

shadow-drive-cli delete-file FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB example.txt

shadow-drive-cli edit-file --storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB --path /path/to/new/file.txt

shadow-drive-cli edit-file FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB /path/to/new/file.txt

shadow-drive-cli store-files --batch-size 100 --storage-account FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB file1.txt file2.txt

shadow-drive-cli store-files FKDU64ffTrQq3E1sZsNknefrvY8WkKzCpRyRfptTnyvB file1.txt file2.txt

shdwDrive is a decentralized mobile storage platform that allows you to store files securely while also providing opportunities to participate in the network as an operator.

Q: How do I start using shdwDrive? A: Getting started is simple:

Download and install the shdwDrive mobile app on your Android device

Connect your wallet

Create a storage bucket or choose a storage plan

Begin uploading your files

Q: Do I need a specific wallet to use shdwDrive? A: Yes, shdwDrive requires a Solana-compatible wallet. The app will guide you through connecting your preferred wallet during setup.

Q: What happens after I connect my wallet? A: After connecting your wallet, you'll be guided through:

A brief onboarding process

Options to create storage space

Access to the main dashboard where you can manage files and operator settings

Q: What is a bucket? A: A bucket is your personal storage space on shdwDrive where you can store and organize your files. Think of it as your private folder in the decentralized network.

Q: How do I create a bucket? A: To create a bucket:

Open the shdwDrive app

Look for the "Create Bucket" button on the home screen

Follow the prompts to set up your new storage space

Q: Is there an iOS app? A: No, not at this time. Android dominates global market share with approximately 70-75% of all smartphones worldwide, while iOS (iPhone) accounts for about 25-30%. We will complete the majority of our feature rollout on Android first, refining the user and operator experience, before moving to support the iOS device family.

Q: Why do I need to authorize each file upload separately? A: Currently, each file upload requires a separate authorization through your wallet for security purposes. This is an intentional security feature that:

Ensures proper authorization of all file operations

Prevents unauthorized bulk uploads

Maintains a clear record of file ownership

Protects your data and storage space